The Neurosciences and Music-V: Cognitive Stimulation and Rehabilitation

Abstract:

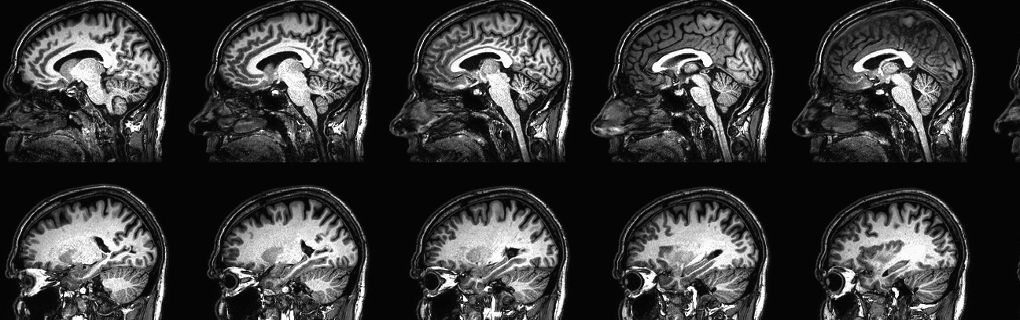

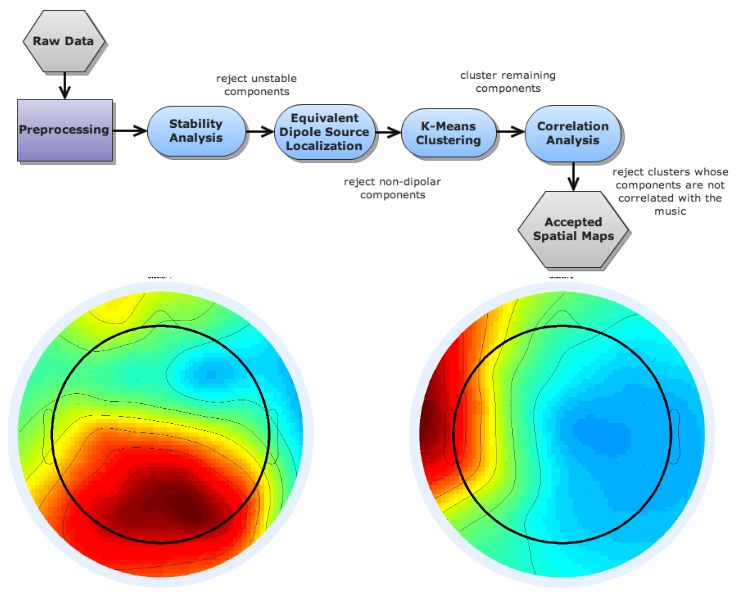

Machine learning methods in functional neuroimage analysis have demonstrated greater sensitivity to cognitive function than previously used univariate methods (Kilian-Hütten 2011, Naselaris 2011). This, coupled with the continued progress of cognitive neuroscience research, has led researchers to employ more ecologically valid experimental procedures and more complex stimuli. For example, recent work on stimulus reconstruction has demonstrated a remarkable ability to reproduce natural images (Naselaris 2009), video (Nishimoto 2011), and speech (Pasley 2012) from human brain activity. Stimulus reconstruction results can provide a lower bound on the information capacity of a given representation of brain activity, which can be useful for testing computational models of neural processing or as a guide for experimental design. However, given the complexity of the neural representations of these natural stimuli and their likely idiosyncrasies, large datasets and highly personalized models are required to maximize the expressive capacity of the learned model. Here we demonstrate the use of simple, data-driven predictive methods to characterize the relationship between various spectro-temporal audio features extracted from natural musical audio and several hours of bioelectric brain activity collected from an individual during listening.
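The abstract does not say which predictive methods were used. As an illustration only, one simple data-driven approach to stimulus reconstruction is a linear ridge regression from brain activity to audio features, scored by the correlation between held-out and predicted features. The sketch below assumes that approach and runs on synthetic data; the names `fit_ridge` and `reconstruction_score`, the array sizes, and the regularization strength are all hypothetical and are not taken from the study.

```python
import numpy as np


def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression weights: (X^T X + alpha*I)^-1 X^T Y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)


def reconstruction_score(Y_true, Y_pred):
    """Pearson correlation between true and predicted values, per column."""
    yt = Y_true - Y_true.mean(axis=0)
    yp = Y_pred - Y_pred.mean(axis=0)
    denom = np.linalg.norm(yt, axis=0) * np.linalg.norm(yp, axis=0)
    return (yt * yp).sum(axis=0) / denom


# Synthetic stand-in data (assumption): brain activity is a noisy linear
# mixture of the audio features. Real recordings will not be this clean.
rng = np.random.default_rng(0)
n_samples, n_channels, n_audio = 2000, 32, 8
audio = rng.standard_normal((n_samples, n_audio))
mixing = rng.standard_normal((n_audio, n_channels))
brain = audio @ mixing + 0.5 * rng.standard_normal((n_samples, n_channels))

# Fit on the first part of the recording, evaluate on the held-out remainder.
split = 1500
W = fit_ridge(brain[:split], audio[:split], alpha=10.0)
scores = reconstruction_score(audio[split:], brain[split:] @ W)
print("held-out correlation per audio feature:", scores.round(2))
```

In this framing, the held-out correlation for each audio feature acts as a lower bound on how much information about that feature the chosen representation of brain activity carries: a more expressive model could only recover more.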
