Proceedings of the International Society for Music Information Retrieval

Abstract:

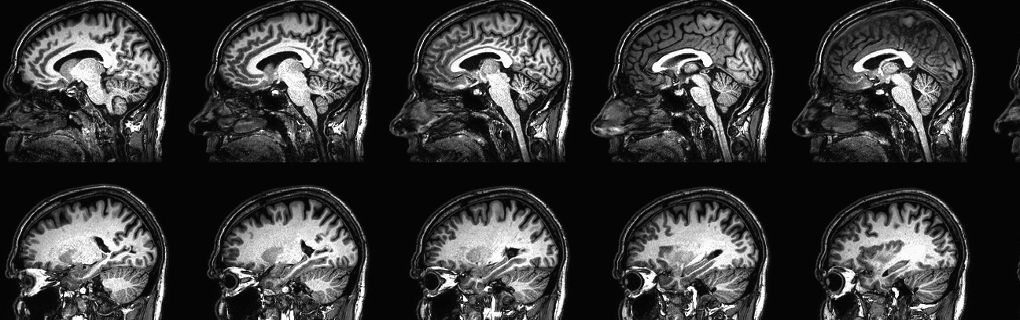

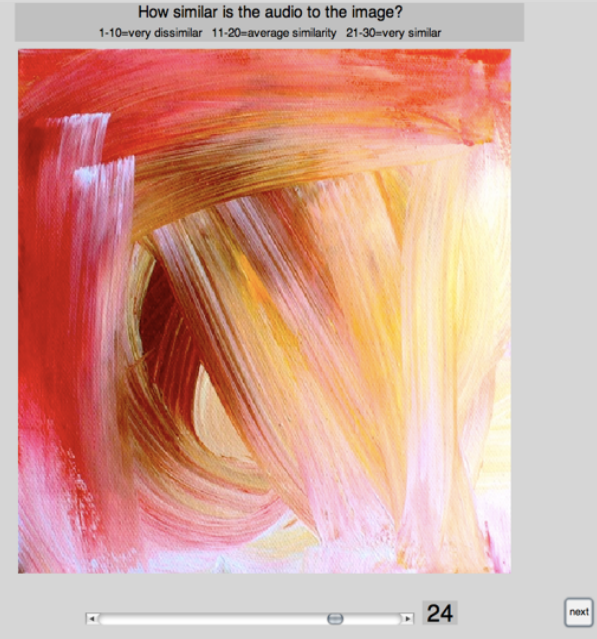

This paper investigates perceptual relationships between art in the auditory and visual domains. First, we conducted a behavioral experiment in which subjects assessed the similarity between 10 musical recordings and 10 works of abstract art. We found significant agreement across subjects as to which images correspond to which audio, even though neither the audio nor the images possessed semantic content. Second, we sought a relationship between audio and images, within a defined feature space, that correlated with the subjective similarity judgments. We trained two regression models, using leave-one-subject-out and leave-one-audio-out cross-validation respectively, and exhaustively evaluated each model's ability to predict the features of images that subjects ranked as similar, given only an audio clip's features. In a retrieval task, the predicted image features were used to retrieve likely related images from the data set, and the retrieved images were evaluated against the ground truth of subjects' actual similarity judgments. Our results show a mean cross-validated prediction accuracy of 0.61 (p < 0.0001) for the first model and 0.51 (p < 0.03) for the second.
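The evaluation procedure described above can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not specify the regression model, the features, or how accuracy is computed, so the sketch assumes ridge regression, synthetic feature vectors, nearest-neighbor retrieval by Euclidean distance, and a top-1 hit rate as the accuracy measure. All function and variable names (`fit_ridge`, `retrieve`, `leave_one_group_out`, and so on) are hypothetical.

```python
import numpy as np

def fit_ridge(X, Y, alpha):
    """Closed-form ridge regression with an intercept (an assumed model choice)."""
    x_mean, y_mean = X.mean(axis=0), Y.mean(axis=0)
    Xc, Yc = X - x_mean, Y - y_mean
    W = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(X.shape[1]), Xc.T @ Yc)
    return W, y_mean - x_mean @ W

def retrieve(pred, image_feats):
    """Return, for each predicted feature vector, the index of the nearest image."""
    dists = np.linalg.norm(image_feats[None, :, :] - pred[:, None, :], axis=2)
    return dists.argmin(axis=1)

def leave_one_group_out(audio_feats, image_feats, audio_idx, image_idx, groups, alpha=1e-6):
    """Mean top-1 retrieval accuracy over folds, holding out one group per fold.

    Each trial pairs an audio clip (audio_idx) with the image a subject judged
    most similar (image_idx). `groups` holds subject ids for leave-one-subject-out
    or audio ids for leave-one-audio-out.
    """
    X = audio_feats[audio_idx]   # inputs: audio features per trial
    Y = image_feats[image_idx]   # targets: features of the chosen image
    fold_accuracies = []
    for g in np.unique(groups):
        held_out = groups == g
        W, b = fit_ridge(X[~held_out], Y[~held_out], alpha)
        retrieved = retrieve(X[held_out] @ W + b, image_feats)
        fold_accuracies.append((retrieved == image_idx[held_out]).mean())
    return float(np.mean(fold_accuracies))

# Synthetic stand-in data (assumption): 5 subjects, 10 clips, 10 images, and
# image features that are an exact linear function of the audio features.
rng = np.random.default_rng(0)
n_subjects, n_items = 5, 10
audio_feats = rng.normal(size=(n_items, 4))
image_feats = audio_feats @ rng.normal(size=(4, 3))
subject_ids = np.repeat(np.arange(n_subjects), n_items)
audio_idx = np.tile(np.arange(n_items), n_subjects)
image_idx = audio_idx.copy()     # every subject pairs clip i with image i

acc_subject = leave_one_group_out(audio_feats, image_feats, audio_idx, image_idx, subject_ids)
acc_audio = leave_one_group_out(audio_feats, image_feats, audio_idx, image_idx, audio_idx)
print(acc_subject, acc_audio)
```

The two cross-validation schemes differ only in the grouping variable. Holding out a subject tests generalization to a new listener on clips the model has already seen, whereas holding out an audio clip tests generalization to unseen audio, which is the harder setting and is consistent with the lower accuracy reported for the second model.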