ACM Multimedia

Abstract:

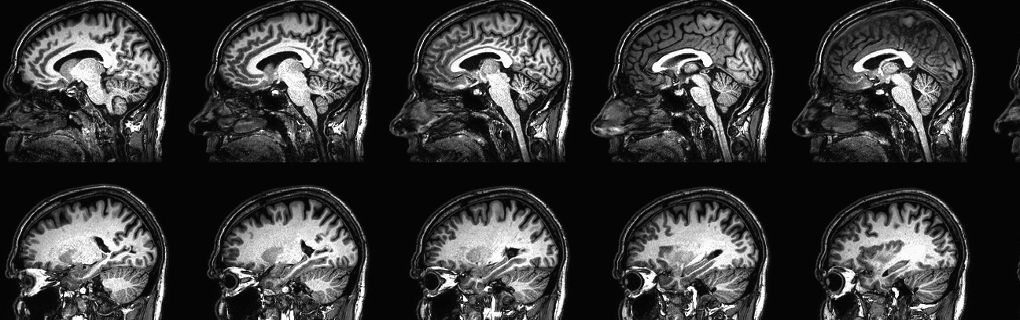

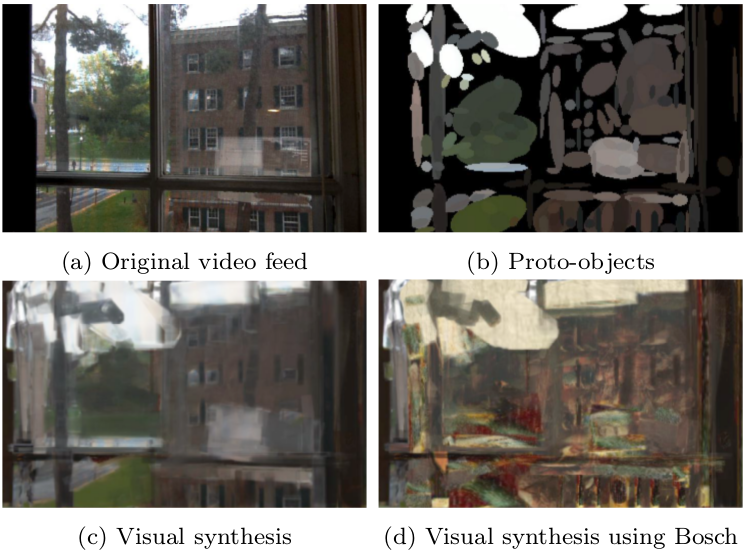

"Resynthesizing Perception" immerses participants in an audiovisual augmented reality, delivered through goggles and headphones, while they explore their environment. What they hear and see is a computationally generated synthesis of what they would normally hear and see. By exposing the associations and juxtapositions that this synthesis creates, the work aims to raise questions about the nature of the representations that support perception. Two modes of operation are possible. In the first mode, salient auditory events from the surrounding environment are stored and continually aggregated into a database while a participant is immersed. Similarly, for vision, a model of exogenous attention is used to continually store proto-objects of the ongoing dynamic visual scene, captured by a camera mounted on the participant's goggles. The aggregated salient auditory events and proto-objects form a set of representations that are used to resynthesize the microphone and camera inputs in real time. In the second mode, instead of extracting representations from the real world, an existing database of representations already extracted from scenes such as images of paintings and natural auditory scenes is used to resynthesize the real world. This work was previously exhibited at the Victoria and Albert Museum in London. Of the 1,373 people who participated in the installation, 21 agreed to be filmed and to fill out a questionnaire. We report their feedback and show that "Resynthesizing Perception" is an engaging and thought-provoking experience that questions the nature of perceptual representations.
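The abstract does not say how stored representations are matched against the live input. The following is a minimal sketch of the two modes, assuming a concatenative-synthesis-style approach: each incoming audio or visual segment is reduced to a feature vector, salience is approximated by a hypothetical energy threshold, and each segment is replaced by its nearest stored neighbour. The names `RepresentationDatabase`, `salience_threshold` and `resynthesize` are hypothetical and are not taken from the installation itself.

```python
import math


def energy(vector):
    """Hypothetical salience proxy: sum of squared feature values."""
    return sum(x * x for x in vector)


class RepresentationDatabase:
    """Hypothetical store of salient segments (feature vectors)."""

    def __init__(self, salience_threshold, initial=None):
        self.salience_threshold = salience_threshold
        # Mode 2 can start from representations extracted elsewhere.
        self.items = [list(v) for v in (initial or [])]

    def maybe_store(self, segment):
        # Keep only segments whose energy passes the assumed salience threshold.
        if energy(segment) >= self.salience_threshold:
            self.items.append(list(segment))
            return True
        return False

    def nearest(self, segment):
        # Return the stored vector closest in Euclidean distance, or None if empty.
        if not self.items:
            return None
        return min(self.items, key=lambda item: math.dist(item, segment))


def resynthesize(stream, db, aggregate=True):
    """Replace each incoming segment with its nearest stored representation.

    aggregate=True  sketches mode 1: salient input is added to the database.
    aggregate=False sketches mode 2: the database is fixed in advance.
    """
    output = []
    for segment in stream:
        # Match against what was stored *before* this segment arrived,
        # so a segment never trivially resynthesizes itself.
        match = db.nearest(segment)
        # Assumption: pass the input through until the database has content.
        output.append(match if match is not None else list(segment))
        if aggregate:
            db.maybe_store(segment)
    return output
```

Matching before storing is a design choice made for the sketch: it ensures that, in the first mode, the output is always built from previously heard or seen material rather than echoing the current input. For the second mode, the database would be constructed with `initial=` set to vectors extracted beforehand and `resynthesize` called with `aggregate=False`.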
