Neural Information Processing Systems (NIPS)

Abstract:

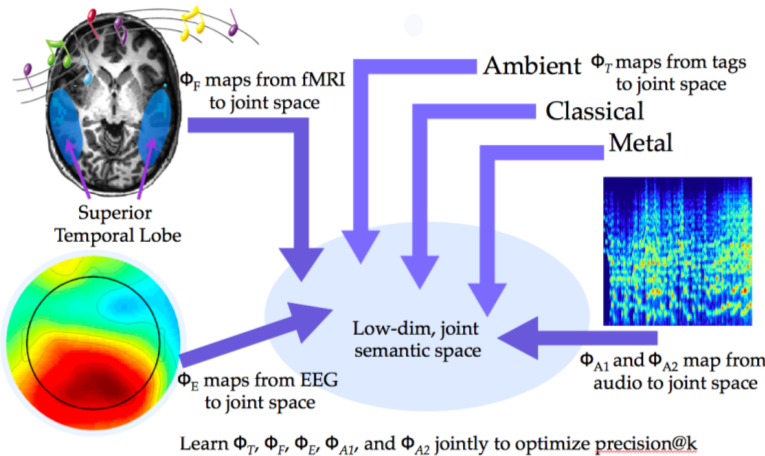

The ability to reconstruct audio-visual stimuli from human brain activity is an important step towards intelligent brain-computer interfaces, and it is also a valuable tool for cognitive neuroscience research. We propose a general method for stimulus reconstruction that learns simultaneously from multiple sources of brain activity and from multiple stimulus representations. The method adapts the multi-task semantic embedding model [1] into a joint tag-brain-stimulus model, in which several disparate feature spaces are projected into a single joint space anchored by semantic labels. We describe an application of this method to the reconstruction of musical audio stimuli, using a nearest-neighbor approach within the joint semantic space. We argue that this method can improve stimulus reconstruction accuracy because it incorporates both bottom-up and top-down approaches.

Keywords: stimulus reconstruction, multi-source learning, semantic embedding, fMRI, EEG, audio, music, tags
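The nearest-neighbor reconstruction step described in the abstract can be illustrated with a minimal sketch. It assumes that training has already produced one linear projection per feature space into the joint space, and it uses cosine similarity as the nearest-neighbor metric. Both the linear form and the metric are assumptions, not details confirmed by the abstract. The names `project`, `reconstruct`, and `rank_tags`, the matrices `W_brain` and `W_audio`, and the tag vectors are hypothetical toy values chosen for illustration, not learned parameters.

```python
import math
from typing import Dict, List, Sequence

Vector = List[float]
Matrix = List[List[float]]


def project(W: Matrix, x: Sequence[float]) -> Vector:
    """Hypothetical linear map into the joint space: returns W @ x (W is k x d)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def reconstruct(W_brain: Matrix, W_audio: Matrix, brain: Sequence[float],
                candidates: Sequence[Sequence[float]]) -> int:
    """Return the index of the candidate audio stimulus whose joint-space
    embedding is nearest (highest cosine similarity) to the embedded brain response."""
    z = project(W_brain, brain)
    scores = [cosine(z, project(W_audio, c)) for c in candidates]
    return max(range(len(scores)), key=scores.__getitem__)


def rank_tags(W_brain: Matrix, brain: Sequence[float],
              tag_embeddings: Dict[str, Vector]) -> List[str]:
    """Rank semantic tags (assumed to live directly in the joint space)
    by similarity to the embedded brain response, most similar first."""
    z = project(W_brain, brain)
    return sorted(tag_embeddings, key=lambda t: cosine(z, tag_embeddings[t]), reverse=True)


# Toy example: 3-dim brain features and 2-dim audio features, 2-dim joint space.
W_brain = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
W_audio = [[0.0, 1.0], [1.0, 0.0]]
brain = [1.0, 0.1, 5.0]
candidates = [[1.0, 0.0], [0.0, 1.0]]
tags = {"calm": [1.0, 0.0], "energetic": [0.0, 1.0]}

print(reconstruct(W_brain, W_audio, brain, candidates))  # prints 1
print(rank_tags(W_brain, brain, tags))  # prints ['calm', 'energetic']
```

Because every modality is compared in the same joint space, another source of brain activity (for example EEG alongside fMRI) would only need its own projection matrix in this sketch; the retrieval code itself would not change.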
